A complete guide to the Agent Zero autonomous AI framework: setup guides, GitHub links, and technical implementation details for Linux-based autonomous development workflows.

Getting Started with Agent Zero GitHub

For developers ready to implement autonomous AI, here’s how to get started with the main Agent Zero framework:

Quick Setup with Docker

Get Agent Zero running in under 2 minutes with Docker. This is the fastest way to experience autonomous AI in action:

```bash
# Pull the official Agent Zero Docker image
docker pull agent0ai/agent-zero

# Run Agent Zero with web interface
docker run -p 50001:80 agent0ai/agent-zero

# Access via browser - Agent Zero will be ready at:
# http://localhost:50001
```

Important Security Note: Agent Zero has full system access within its container. Always run it in isolated environments like Docker. As the official documentation states: “Agent Zero Can Be Dangerous!” Use it responsibly.
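Containers can take a few seconds to start serving. As a minimal sketch, the helper below polls the web interface until it answers. The function name `wait_for_ui` and its parameters are illustrative and not part of Agent Zero. The URL is the one from the Docker command above.

```python
import time
import urllib.error
import urllib.request


def wait_for_ui(url="http://localhost:50001", timeout_s=60.0, interval_s=2.0,
                probe=None):
    """Poll `url` until it answers or `timeout_s` elapses; True if reachable."""

    def default_probe(target):
        # Any HTTP response, even an error status, means the server is up.
        try:
            with urllib.request.urlopen(target, timeout=5):
                return True
        except urllib.error.HTTPError:
            return True
        except (urllib.error.URLError, OSError):
            return False

    probe = probe or default_probe  # `probe` is injectable for offline testing
    deadline = time.monotonic() + timeout_s
    while True:
        if probe(url):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Calling `wait_for_ui()` right after `docker run` returns `True` once the page responds and `False` if the timeout passes first.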

Local Development Installation

```bash
# Clone the repository
git clone https://github.com/agent0ai/agent-zero.git
cd agent-zero

# Install dependencies
pip install -r requirements.txt

# Configure environment
cp .env.example .env
# Edit .env with your API keys

# Run locally
python run.py
```

Essential Configuration

Agent Zero requires API access to language models. Configure your models.yaml and providers.yaml for:

- OpenAI GPT-4 or Claude models for reasoning

- Local models via Ollama for privacy-focused deployments

- Azure OpenAI for enterprise environments
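This guide does not show the schema of models.yaml or providers.yaml, so the sketch below does not read those files. Since the framework routes requests through LiteLLM, it only illustrates how a provider and model choice could be turned into LiteLLM's `provider/model` identifier. `ModelChoice` and `completion_kwargs` are hypothetical names, and the model names in the usage note are placeholders.

```python
from dataclasses import dataclass
from typing import Optional

# Provider prefixes used by LiteLLM's "provider/model" naming convention.
KNOWN_PROVIDERS = {"openai", "anthropic", "ollama", "azure", "openrouter"}


@dataclass(frozen=True)
class ModelChoice:
    """Hypothetical container for one configured model."""
    provider: str
    name: str                       # model name, or deployment name on Azure
    api_base: Optional[str] = None  # e.g. a local Ollama endpoint


def completion_kwargs(choice):
    """Build keyword arguments suitable for a LiteLLM completion call."""
    if choice.provider not in KNOWN_PROVIDERS:
        raise ValueError(f"unknown provider: {choice.provider}")
    kwargs = {"model": f"{choice.provider}/{choice.name}"}
    if choice.api_base:
        kwargs["api_base"] = choice.api_base
    return kwargs
```

For a privacy-focused local deployment, `completion_kwargs(ModelChoice("ollama", "llama3", "http://localhost:11434"))` yields a model id of `ollama/llama3` pointed at Ollama's default port.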

Persistent Development Sessions

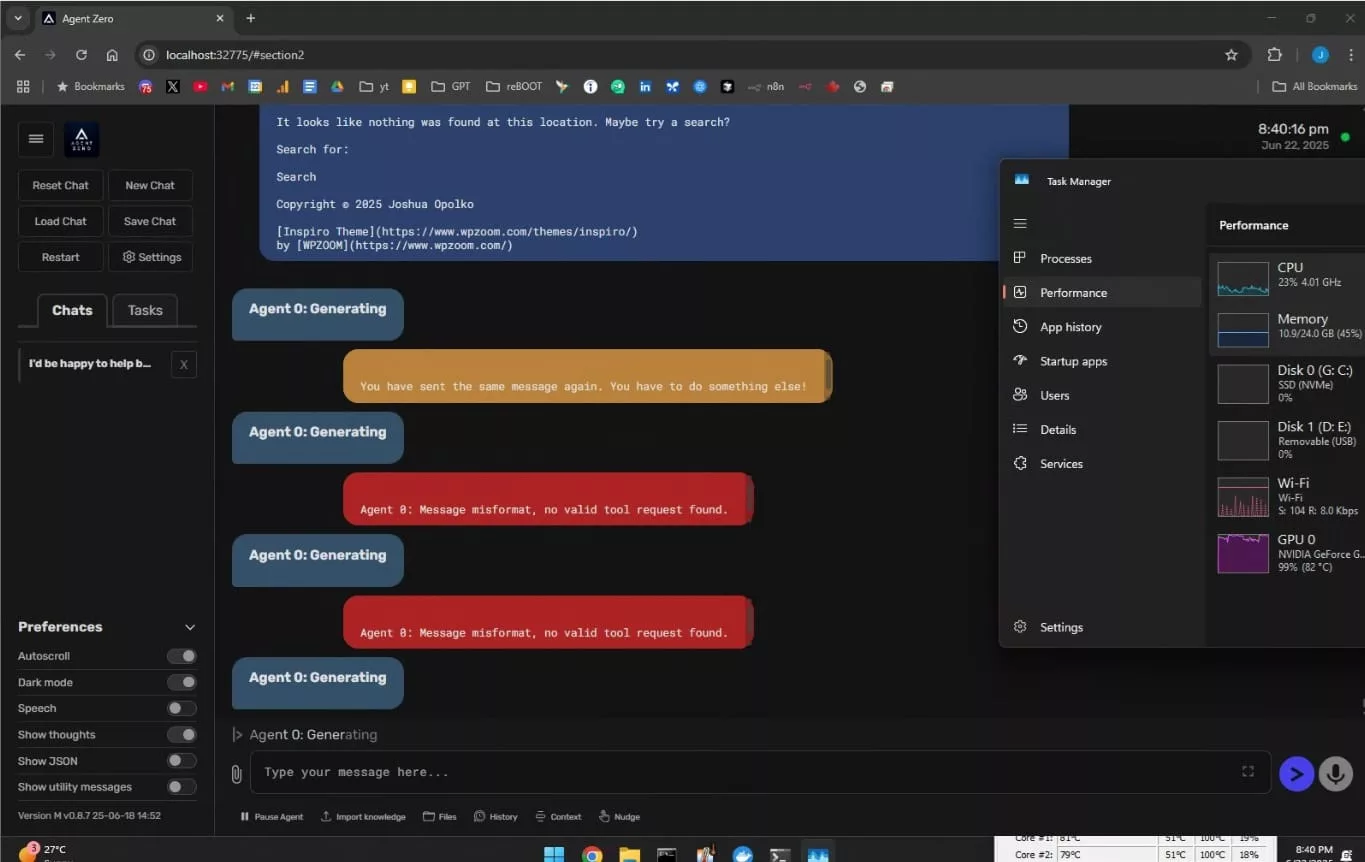

Agent Zero’s persistent memory system enables continuous development workflows where context and progress persist across interactions. Unlike session-based AI assistants, Agent Zero maintains:

- Completed task history and successful patterns

- Failed approaches and debugging insights

- Project-specific configurations and preferences

- Custom tools and utilities developed during sessions

This persistent memory architecture distinguishes Agent Zero from other AI frameworks by enabling true autonomous learning and adaptation within projects. The system builds institutional knowledge over time, becoming more effective at handling similar challenges.
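To make the idea concrete, here is a minimal sketch of memory that survives restarts. It is not Agent Zero's implementation: the `MemoryStore` class, the JSON-lines file, and the word-overlap recall are assumptions chosen to keep the example small.

```python
import json
from pathlib import Path


class MemoryStore:
    """Append-only JSON-lines store that persists across process restarts."""

    def __init__(self, path):
        self.path = Path(path)

    def remember(self, kind, text):
        # `kind` might be "success", "failure", "config", or "tool".
        record = {"kind": kind, "text": text}
        with self.path.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(record) + "\n")

    def recall(self, query, kind=None):
        """Return stored texts that share at least one word with `query`."""
        if not self.path.exists():
            return []
        wanted = set(query.lower().split())
        hits = []
        with self.path.open(encoding="utf-8") as fh:
            for line in fh:
                record = json.loads(line)
                if kind and record["kind"] != kind:
                    continue
                if wanted & set(record["text"].lower().split()):
                    hits.append(record["text"])
        return hits
```

Because every instance reads from the same file, a new session started later can recall failed approaches recorded by an earlier one.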

What You Can Build with Agent Zero

Agent Zero creates its own tools dynamically, enabling sophisticated autonomous workflows. Here are real use cases that motivate developers to adopt the framework:

Software Development Projects

- Full-Stack Applications: Agent Zero creates complete web apps, from database schema to frontend, with comprehensive testing

- Code Refactoring: Automatically modernize codebases, update dependencies, and optimize performance across multiple files

- Bug Investigation: Trace through logs, reproduce issues, and implement fixes autonomously with root cause analysis

- API Integration: Research documentation, write integration code, create tests, and generate usage examples

System Administration & DevOps

- Server Monitoring: Create custom monitoring dashboards, analyze performance metrics, and optimize configurations

- Deployment Automation: Build CI/CD pipelines, configure infrastructure, and manage containerized applications

- Security Audits: Scan systems for vulnerabilities, analyze logs for threats, and implement security measures

Research & Content Creation

- Market Research: Gather data from multiple sources, analyze trends, and create comprehensive business reports

- Technical Documentation: Generate API docs, user guides, and tutorials with working code examples

- Competitive Analysis: Research competitors, analyze their strategies, and identify market opportunities

Try Agent Zero Right Now

Ready to experience autonomous AI? Join the community and start building:

- Discord Community: Join active discussions with other Agent Zero developers

- GitHub Repository: Star the project and explore the source code

- Quick Start: Use the Docker setup above to have Agent Zero running in 2 minutes

The Agentic AI Revolution

The agentic AI market is projected to reach $45 billion in 2025, with Gartner forecasting that 33% of enterprise software will incorporate agentic AI by 2028—up from less than 1% in 2024. Agent Zero eliminates the gap between AI suggestions and execution by operating in actual Linux environments where it installs packages, executes scripts, and manages complete development workflows. Unlike LangChain or AutoGPT, Agent Zero prioritizes radical customization—almost nothing is hard-coded, and all behavior stems from modifiable system prompts.

Technical Architecture: Dynamic Multi-Agent Hierarchy

Agent Zero employs hierarchical multi-agent architecture where each agent reports to a superior and spawns subordinate agents for subtask delegation. The framework leverages LiteLLM for multi-provider LLM support including OpenRouter, OpenAI, Azure, Anthropic, and local models configured via models.yaml and providers.yaml files.
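The reporting structure described above can be sketched in a few lines. The `Agent` class, its method names, and the `A0.1` naming scheme are illustrative assumptions, not Agent Zero's actual classes. The `solve` callback stands in for the work a subordinate would do with its own LLM calls.

```python
class Agent:
    """Node in a hierarchy: one superior, any number of subordinates."""

    def __init__(self, name, superior=None):
        self.name = name
        self.superior = superior
        self.subordinates = []

    def spawn(self, name):
        """Create a subordinate that reports to this agent."""
        child = Agent(name, superior=self)
        self.subordinates.append(child)
        return child

    def chain_of_command(self):
        """Names from this agent up to the top-level agent."""
        node, chain = self, []
        while node is not None:
            chain.append(node.name)
            node = node.superior
        return chain

    def delegate(self, subtasks, solve):
        """Spawn one subordinate per subtask and collect their reports."""
        reports = []
        for i, subtask in enumerate(subtasks, start=1):
            child = self.spawn(f"{self.name}.{i}")
            reports.append((child.name, solve(subtask)))
        return reports
```

A top-level agent created with `Agent("A0")` can call `delegate` with a list of subtasks, and each subordinate can in turn spawn its own subordinates for deeper decomposition.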

Core Capabilities

- Code execution and full terminal access with arbitrary command execution

- Persistent memory with AI-filtered recall and automatic consolidation

- Real-time streaming output with mid-execution user intervention

- Model Context Protocol (MCP) server and client support

- Agent-to-Agent (A2A) protocol for inter-agent communication

- Browser automation, document Q&A with RAG, and speech integration

The Computer-as-Tool Paradigm

Agent Zero’s “computer-as-tool” approach sets it apart. Rather than relying on predetermined single-purpose tools, agents write and run their own code through terminal access. This contrasts with frameworks like CrewAI, which excel at role-based execution within constrained toolsets. The framework implements the ReAct (Reasoning and Acting) pattern, in which an agent interleaves reasoning steps with actions and observations. The resulting human-interpretable trajectories help mitigate the hallucination, brittleness, and coordination failures that plagued first-generation autonomous systems.
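A minimal sketch of a ReAct-style loop with code execution as the only tool follows. The `scripted_model` function is a stand-in for a real LLM call, and the dictionary format it returns is an assumption made for this example. Agent Zero's real prompts and message formats are not shown here.

```python
import subprocess
import sys


def run_python(code, timeout_s=10):
    """Tool: run agent-written code in a child interpreter, return its output."""
    proc = subprocess.run([sys.executable, "-c", code], capture_output=True,
                          text=True, timeout=timeout_s)
    return (proc.stdout + proc.stderr).strip()


def react_loop(task, model, max_steps=5):
    """Alternate thought, action, and observation until a final answer."""
    trajectory = [f"Task: {task}"]
    for _ in range(max_steps):
        step = model(trajectory)  # hypothetical contract: returns a dict
        trajectory.append(f"Thought: {step['thought']}")
        if "final" in step:
            trajectory.append(f"Final: {step['final']}")
            return step["final"], trajectory
        observation = run_python(step["code"])
        trajectory.append(f"Action: run_python\nObservation: {observation}")
    return None, trajectory


def scripted_model(trajectory):
    """Stand-in for an LLM: writes code first, then reads the observation."""
    last = trajectory[-1]
    if "Observation:" not in last:
        return {"thought": "I can compute this with a short script.",
                "code": "print(sum(range(1, 101)))"}
    value = last.split("Observation:")[1].strip()
    return {"thought": "The script printed the result.", "final": value}
```

Running `react_loop("Sum the integers 1..100", scripted_model)` produces a readable trajectory of thoughts, one action, and its observation. In a real deployment the child interpreter would run inside the container rather than on the host.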

Protocol Integration: MCP and A2A

Model Context Protocol (MCP)

MCP, released by Anthropic in late 2024 and adopted by OpenAI in March 2025, provides a standardized interface for connecting AI models to external tools and data sources. Where RAG retrieves passages to augment a single query, MCP defines how a client discovers and invokes the tools, resources, and prompts that a server exposes. Any memory of user preferences or prior conversations comes from the servers and the host application, not from the protocol itself. Agent Zero’s MCP integration supports composability and clean tool integration for complex enterprise systems. For developers looking to leverage MCP in structured development workflows, Claude Code’s specification-driven approach demonstrates how MCP can support complex feature development through systematic requirements analysis and implementation.
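MCP messages are JSON-RPC 2.0, so the shape of a tool interaction can be sketched without any SDK. The helper names below are illustrative, and `search_docs` is a hypothetical tool. The `tools/list` and `tools/call` method names follow the MCP specification as I understand it.

```python
import itertools
import json

_request_ids = itertools.count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request with a fresh numeric id."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request():
    """Ask a server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool_request(name, arguments):
    """Invoke one tool by name with a dict of arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def tool_names(list_response):
    """Extract tool names from a tools/list response."""
    return [tool["name"] for tool in list_response["result"]["tools"]]


def encode(message):
    """Serialize a message for the transport (stdio or HTTP)."""
    return json.dumps(message)
```

A client would send `list_tools_request()` first, pick a tool from the response, and then send `call_tool_request("search_docs", {"query": "docker"})`.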

Agent-to-Agent Protocol (A2A)

Google launched A2A on April 9, 2025, with support from 50+ partners including Salesforce and MongoDB. Google then donated the protocol to the Linux Foundation, which announced the Agent2Agent project on June 23, 2025 as a vendor-neutral home for secure agent-to-agent communication. Built on HTTP, SSE, and JSON-RPC, A2A enables capability discovery through “Agent Cards,” task lifecycle management, context sharing, and UI capability negotiation, allowing Agent Zero’s hierarchical agents to coordinate complex workflows across vendor ecosystems. Real-world implementations like JOSIE demonstrate how autonomous AI systems combine MCP and A2A protocols for advanced workflow automation with persistent memory.
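Capability discovery through Agent Cards can be sketched as plain data plus a lookup. The card below carries only a small subset of fields modeled on the A2A specification as I understand it. The helper names, agent names, URLs, and skill tags are all hypothetical.

```python
def make_agent_card(name, url, description, skills):
    """Build a minimal Agent Card; `skills` maps skill id to a list of tags."""
    return {
        "name": name,
        "description": description,
        "url": url,
        "version": "0.1.0",
        "capabilities": {"streaming": True},
        "skills": [
            {"id": skill_id, "name": skill_id.replace("-", " "), "tags": list(tags)}
            for skill_id, tags in skills.items()
        ],
    }


def find_agents(cards, tag):
    """Capability discovery: names of agents advertising a skill with `tag`."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill["tags"] for skill in card["skills"])
    ]
```

An orchestrating agent could fetch the cards of known peers, call `find_agents(cards, "docker")`, and send the deployment subtask to whichever agent advertises that skill.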

Real-World Applications

Gartner predicts 25% of companies using generative AI will launch agentic AI pilots in 2025, growing to 50% by 2027. Real-world implementations demonstrate maturity: Genentech built an agentic solution on AWS Bedrock automating biopharmaceutical research workflows. Bank of America’s Erica handles millions of monthly customer interactions. Agent Zero’s architecture supports dataset loading, statistical analysis, visualization generation, development environment setup, automated testing, security scanning, and compliance reporting, all with automatic dependency resolution.

Security Architecture: Docker Isolation

Agent Zero requires robust isolation because it can execute commands at the system level. Current sandboxing practice combines container isolation in Docker, strict resource limits to prevent exhaustion, network access controls, and security policy analysis before execution. Agent Zero’s Docker-based architecture follows the same model as enterprise offerings like AWS Bedrock AgentCore Code Interpreter, which provides dedicated sandbox environments with complete workload isolation.
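As a sketch of what resource limits and network controls can look like in practice, the function below assembles a `docker run` command with memory, CPU, and process caps, and publishes the web port on the loopback interface only. The limit values are illustrative assumptions rather than Agent Zero recommendations, and `sandboxed_run_argv` is a hypothetical helper.

```python
import shlex


def sandboxed_run_argv(image="agent0ai/agent-zero", host_port=50001,
                       memory="4g", cpus="2", pids_limit=512):
    """Build a `docker run` argv with resource caps and a loopback-only port."""
    return [
        "docker", "run",
        "--memory", memory,                 # cap container RAM
        "--cpus", cpus,                     # cap CPU time
        "--pids-limit", str(pids_limit),    # cap number of processes
        "-p", f"127.0.0.1:{host_port}:80",  # reachable from this host only
        image,
    ]


def as_shell(argv):
    """Render the argv as a copy-pasteable shell command."""
    return shlex.join(argv)
```

Passing the list to `subprocess.run` avoids shell quoting issues, while `as_shell(sandboxed_run_argv())` gives a string suitable for documentation or logs.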

Comparative Analysis: Agent Zero vs. Industry Leaders

LangChain (30% market share): Excels in structured, reliable systems with deterministic outputs and robust error handling—ideal for enterprise-grade production systems.

AutoGPT (25% market share): Emphasizes goal-oriented autonomy with real-time internet access and self-modification. Over 70% of developers prefer AutoGPT for autonomous code generation projects.

CrewAI (20% market share): Simplifies collaborative task execution with well-defined roles and team-oriented workflows.

Agent Zero’s Differentiation

- Agent-created dynamic tools vs. predefined static tools

- Prompt-based customization with no hard-coded rails

- Persistent memory with AI consolidation vs. session-based memory

- Hierarchical multi-agent architecture with role profiles

- Transparent open-source internals vs. black-box operations

Memory Systems: Beyond Session-Based Context

Agent Zero’s persistent memory with AI-filtered recall and automatic consolidation maintains state across sessions, tracks completed tasks, and learns from previous successes and failures. Version 0.9.6 introduces a memory dashboard for visualization and management, secrets management for secure credentials, and enhanced AI-powered filtering. This architecture aligns with emerging MCP capabilities, distinguishing Level 3+ autonomous systems from simpler implementations.
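Consolidation can be pictured as merging memories that say nearly the same thing. The source describes this step as AI-driven. The sketch below swaps in plain string similarity from Python's standard library as a stand-in, so both the `consolidate` function and its threshold are assumptions for illustration only.

```python
from difflib import SequenceMatcher


def consolidate(memories, threshold=0.8):
    """Merge near-duplicate memories, keeping the longer text of each pair."""
    kept = []
    for text in memories:
        for i, existing in enumerate(kept):
            similarity = SequenceMatcher(None, text.lower(),
                                         existing.lower()).ratio()
            if similarity >= threshold:
                # Same insight recorded twice: keep the more detailed version.
                if len(text) > len(existing):
                    kept[i] = text
                break
        else:
            kept.append(text)  # nothing similar stored yet
    return kept
```

A production system would likely compare embeddings or ask a model to judge overlap, but the control flow of comparing, merging, and keeping the richer record stays the same.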

Why Agent Zero Matters

The gap between “AI suggests a solution” and “solution actually works” has historically been massive. Agent Zero closes that loop through genuine autonomous execution: setup, testing, and iteration without human translation. When errors occur, the system adapts and retries autonomously. That’s the innovation: AI that learns from mistakes within single tasks, not just across training datasets. As the agentic AI market accelerates toward $45 billion in 2025, frameworks like Agent Zero represent the technical foundation for next-generation autonomous systems executing complete workflows from conception to deployment.

Resources

- Agent Zero GitHub Repository – Official agent0ai repository and comprehensive documentation

- Model Context Protocol Documentation – Anthropic’s MCP standard and implementation guide

- Agent-to-Agent Protocol (A2A) – Google’s inter-agent communication standard

- LangChain Documentation – Leading AI agent orchestration framework

- AutoGPT Project – Autonomous GPT-4 agent framework

- ReAct: Synergizing Reasoning and Acting in Language Models – Research paper on ReAct pattern

- AWS Bedrock AgentCore – Enterprise AI agent infrastructure

- Gartner Agentic AI Research – Industry forecasts and adoption trends

Last updated: January 2025