Build an uncensored AI assistant with persistent memory using n8n automation, vector databases, and privacy-focused web search

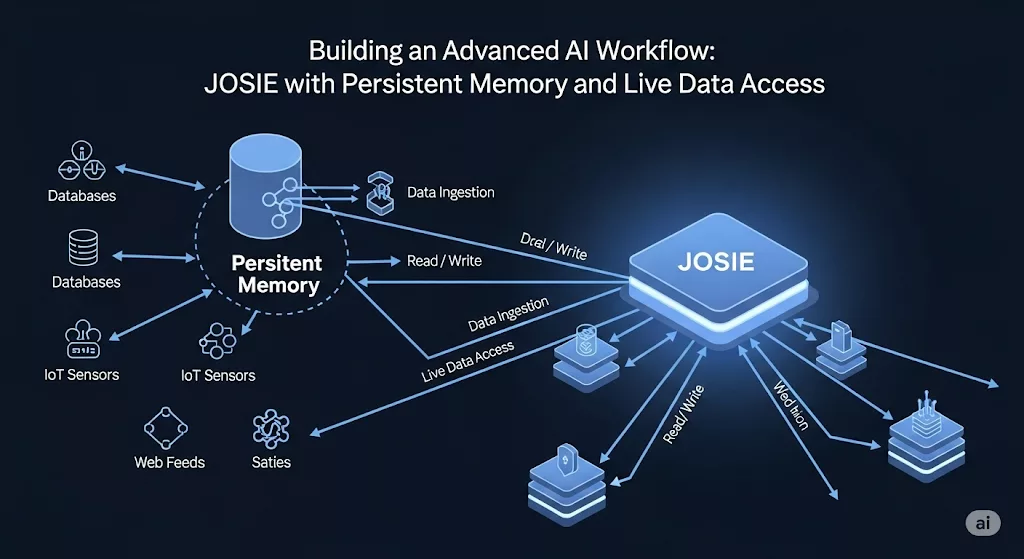

Traditional AI assistants lose memory between sessions and have limited web access. JOSIE solves both problems with a three-tier architecture built from open-source tools: n8n for workflow automation, the Qdrant vector database for persistent memory, and SearXNG for unlimited web search, all with complete data privacy.

Architecture Overview

JOSIE’s architecture demonstrates how modern autonomous AI systems combine multiple protocols and components. Similar to Agent Zero’s approach to multi-agent hierarchies and protocol integration, JOSIE leverages standardized interfaces for tool integration and workflow coordination.

Component 1: JOSIEFIED-Qwen3:8b Language Model

Built on Alibaba’s Qwen3, with 8.19B parameters and a 40K-token context window:

- Advanced Intelligence – Reasoning capabilities inherited from the Qwen3 architecture

- Extended Context – 40K tokens for lengthy documents and conversations

- Tool Integration – Supports calls to external APIs, with reduced content filtering

- Local Processing – Complete privacy via Ollama, which enables running powerful AI models on your own hardware
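As a rough illustration of what local processing looks like in practice, the sketch below builds a request for Ollama's `/api/generate` endpoint on its default port. The model tag is copied from this article and is assumed to match the name under which the model was pulled. `build_generate_request` and `read_generate_response` are hypothetical helper names, and the workflow itself talks to Ollama through n8n rather than through this code.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address
MODEL_NAME = "JOSIEFIED-Qwen3:8b"      # assumption: tag as written in this article


def build_generate_request(prompt: str, model: str = MODEL_NAME,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def read_generate_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming response body."""
    return json.loads(raw)["response"]


# Sending the request requires a running Ollama instance:
# with urllib.request.urlopen(build_generate_request("Hello")) as resp:
#     print(read_generate_response(resp.read()))
```

Because the request targets `localhost`, the prompt and the response never leave the machine.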

Component 2: Qdrant Vector Database for Persistent Memory

Qdrant creates persistent memory through semantic search:

- Document Processing – Uploaded PDFs are split into 200-character chunks with a 50-character overlap, then embedded with mxbai-embed-large:335m

- Conversation Memory – Every interaction stored with rich metadata via LangChain

- Advanced Retrieval – Context retrieval (top-K: 3) for documents, memory retrieval (top-K: 50) for conversations

- Optimized Storage – Vector quantization reduces RAM usage by up to 97% while keeping searches sub-second
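The chunking and retrieval settings above can be sketched in plain Python. This is a simplified illustration, not the workflow's actual implementation: `chunk_text` uses a fixed sliding window, whereas a LangChain text splitter may also respect separators, and `top_k` does a brute-force cosine-similarity scan where Qdrant uses an index. Both function names are hypothetical, and cosine similarity is an assumed distance metric.

```python
import math


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks sharing `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # with the defaults, a new chunk starts every 150 characters
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


def top_k(query_vec: list[float], stored: list[tuple[list[float], str]], k: int):
    """Return the k (score, payload) pairs most similar to query_vec."""
    def cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    scored = [(cosine(query_vec, vec), payload) for vec, payload in stored]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]
```

With these defaults, consecutive chunks share 50 characters, so a sentence cut at a chunk boundary still appears intact in one of the two neighbours. Calling `top_k(..., k=3)` mirrors the document setting and `k=50` the conversation-memory setting.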

Component 3: SearXNG Privacy-Focused Web Search

SearXNG provides unlimited, privacy-focused web search:

- Unlimited Queries – No API costs or quotas on your own instance, although upstream engines may still throttle heavy traffic

- Privacy Protection – Zero tracking, no data retention

- Multi-Engine – Aggregates results from Google, Bing, and DuckDuckGo

- JSON API – Clean integration for AI workflows
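To make the JSON integration concrete, here is a minimal sketch of how a client might build a SearXNG query URL and trim the response down to what a language model needs. It assumes an instance at `http://localhost:8080` with the `json` output format enabled in its settings. The helper names are hypothetical, and the `title`, `url`, and `content` fields reflect the usual shape of SearXNG results, so verify them against your own instance.

```python
import json
from urllib.parse import urlencode


def build_search_url(base_url: str, query: str) -> str:
    """Build a SearXNG search URL that requests JSON output."""
    params = {"q": query, "format": "json"}
    return f"{base_url.rstrip('/')}/search?{urlencode(params)}"


def extract_results(payload: str, limit: int = 5) -> list[dict]:
    """Keep only title, URL, and snippet from the first `limit` results."""
    data = json.loads(payload)
    return [
        {
            "title": item.get("title", ""),
            "url": item.get("url", ""),
            "snippet": item.get("content", ""),
        }
        for item in data.get("results", [])[:limit]
    ]


# Fetching requires a running instance (assumed address):
# import urllib.request
# url = build_search_url("http://localhost:8080", "qdrant quantization")
# with urllib.request.urlopen(url) as resp:
#     print(extract_results(resp.read().decode("utf-8")))
```

Trimming each result to three short fields keeps the search context small enough to fit comfortably alongside retrieved memory in the model's prompt.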

Key Workflow Features

- Smart Tool Selection – Automatic web search, memory retrieval, or document access based on query context using n8n conditional logic

- Session Management – Persistent conversations with analytics via n8n execution data

- Error Handling – Graceful degradation using n8n error workflows
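The routing and fallback behaviour described above can be approximated as follows. This is only an illustrative sketch: the keyword lists, the tool names, and the fallback message are all hypothetical, and the real workflow expresses this logic with n8n nodes rather than Python.

```python
# Hypothetical keyword hints; a real workflow would tune or replace these.
MEMORY_HINTS = ("earlier", "last time", "you said", "remember", "previously")
DOCUMENT_HINTS = ("document", "pdf", "file", "report", "uploaded")
WEB_HINTS = ("latest", "today", "news", "current", "price")


def select_tool(query: str) -> str:
    """Pick a tool name from simple keyword matches on the query."""
    q = query.lower()
    if any(hint in q for hint in MEMORY_HINTS):
        return "memory_retrieval"
    if any(hint in q for hint in DOCUMENT_HINTS):
        return "document_access"
    if any(hint in q for hint in WEB_HINTS):
        return "web_search"
    return "none"  # answer from the model alone


def run_with_fallback(tool_fn, query: str,
                      fallback: str = "(tool unavailable, answering without it)") -> str:
    """Call a tool, returning a fallback message instead of failing outright."""
    try:
        return tool_fn(query)
    except Exception:
        return fallback
```

For example, `select_tool("Summarize the PDF I uploaded")` returns `"document_access"`, and a tool that raises because its backing service is down yields the fallback string, so the conversation can continue.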

Real-World Applications

Personal Knowledge Management: Upload PDFs, query them conversationally, and maintain a searchable interaction history for research and learning.

Business Intelligence: Maintain project context and customer support memory, and combine stored knowledge with live web data for informed decision-making.

Education & Research: Track learning progress, access academic papers, and combine historical knowledge with current information.

Privacy & Security

JOSIE prioritizes user privacy through local processing and encrypted storage. For complete context on AI data privacy challenges and best practices, including GDPR compliance, consent frameworks, and privacy-preserving technologies, see our comprehensive guide on data protection in AI systems.

- Complete Control – Local AI processing, no data leaves your network

- GDPR Compliance – Air-gapped deployment options for sensitive data

- Enterprise Security – Isolated environment with comprehensive audit logs

Quick Setup Guide

Prerequisites: Docker, n8n, Ollama, Qdrant, SearXNG

Installation Steps:

- Deploy SearXNG via Docker Compose

- Install Qdrant locally or in the cloud

- Configure JOSIEFIED-Qwen3:8b in Ollama

- Import n8n workflow template
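After the steps above, a quick smoke check can confirm that each service is accepting connections. The sketch below assumes everything runs on `localhost` with the commonly used default ports (n8n 5678, Ollama 11434, Qdrant 6333, SearXNG 8080). Adjust the mapping if your Docker setup publishes different ports; the function names are hypothetical.

```python
import socket

# Assumed default ports; change these to match your deployment.
DEFAULT_SERVICES = {"n8n": 5678, "Ollama": 11434, "Qdrant": 6333, "SearXNG": 8080}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(services: dict = DEFAULT_SERVICES, host: str = "localhost") -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in services.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name}: {'up' if up else 'not reachable'}")
```

An open port only shows that something is listening there, so a failed workflow run may still need a closer look at the individual service logs.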

Performance: Local processing, optimized storage, and sub-second searches, with a Kubernetes-ready design for production scaling.

Conclusion

JOSIE delivers privacy-preserving AI that grows smarter with every interaction. Combining advanced language models, persistent memory, and unlimited web access creates autonomous assistance without vendor lock-in. Start with the n8n documentation and explore AI workflow automation.

Essential Resources

- n8n Workflow Automation – Open-source workflow automation platform

- Qdrant Vector Database – High-performance vector similarity search

- Ollama Local AI – Run large language models locally

- SearXNG Metasearch – Privacy-respecting search engine

- LangChain – Framework for building LLM applications

- Qwen Models – Alibaba open-source language models

Last updated: January 2025